Last year, I posted about how busy we were around OpenStack Summit Paris and even after 365 days, we’re still keeping busy. While one blog post isn’t enough space to cover everything we’ve been doing, I thought it would be nice to at least highlight some of the key projects. So let’s begin!

Akanda, the startup we spun off, has really taken over the full management of our virtual routing solution. It has been updated to work with OpenStack Kilo and is being maintained on master. They have also made some key hires to help ensure the success of Akanda. From the DreamCompute perspective, this has allowed us to spend less cycles on patching Akanda to work on what we’ve deemed “Franken-Juno” (our custom patches on top of OpenStack Juno) and has freed us up to work on making our cluster more stable and user friendly. Since we are Akanda’s most important (only?) customer, we have a strong influence on their priorities. DreamCompute is currently working on an upgrade to Kilo which has required us to lean on Akanda for some support. After we clear the Kilo hurdle, we will likely be asking Akanda for a VPNaaS solution. We’re also considering how we can make things more user-friendly when spinning up VMs by automatically assigning a public IPv4 address (should a customer want such a thing), which will require some coordination with Akanda. There is plenty of work to do on this front, but we have a lot of moment in what seems to be a good direction.

Akanda, the startup we spun off, has really taken over the full management of our virtual routing solution. It has been updated to work with OpenStack Kilo and is being maintained on master. They have also made some key hires to help ensure the success of Akanda. From the DreamCompute perspective, this has allowed us to spend less cycles on patching Akanda to work on what we’ve deemed “Franken-Juno” (our custom patches on top of OpenStack Juno) and has freed us up to work on making our cluster more stable and user friendly. Since we are Akanda’s most important (only?) customer, we have a strong influence on their priorities. DreamCompute is currently working on an upgrade to Kilo which has required us to lean on Akanda for some support. After we clear the Kilo hurdle, we will likely be asking Akanda for a VPNaaS solution. We’re also considering how we can make things more user-friendly when spinning up VMs by automatically assigning a public IPv4 address (should a customer want such a thing), which will require some coordination with Akanda. There is plenty of work to do on this front, but we have a lot of moment in what seems to be a good direction.

For a more DreamCompute centric update: we’re priming for new hardware, an upgrade to Kilo, and a network architecture overhaul. All of these tasks really go hand-in-hand. The key driver here is that our current network architecture relies on a piece of software that has not been performing up to our expectations/needs. As such, we need to migrate off of it in a graceful manner that is not too disruptive to our current users. As of today, we are also using about 75% of our available CPUs with about 3451 vCPUs provisioned and we’re using about 60% of our available RAM with 4172GB of RAM provisioned. This resource consumption comes from about 2500 VMs. Since we are swapping out a very important part of the network stack, we want to make sure that it scales to our needs. We have decided to build out an entirely new cluster with new Cumulus (linux based) switches and new hardware with better CPUs in them. We are currently expecting to make this new cluster available to customers to migrate to at their own will. There is no live-migration available in this scenario, but we’re hoping that better hardware performance and network stability will be enough incentive to move customers to the new setup.

Finally, OpenStack Summit Tokyo is just around the corner. In Vancouver, DreamCompute gave a talk about our “COCO” stack (Ceph, Openstack, Cumulus, Overlay), which is a cute name for our architectural decisions. We also gave a talks in Paris at the OpenStack summit prior to Vancouver. It is a core value of ours to participate in the open source communities, so we put high value on these sorts of things. To my knowledge, we don’t have any talks lined up yet for OpenStack Tokyo but given our history, I would be surprised if we don’t have something prepared. Also, and as a segue to my last point, I will be making the trip to Tokyo!

OpenStack Tokyo will be my first trip outside of North America. To be honest, I’m really not looking forward to the 14 hour plane flight. But I’m excited to experience the Japanese culture and meet some of the OpenStack people I’ve been working with face-to-face. One pain point of OpenStack is the deployment and recent activity has spurred some more momentum in for a group called OpenStack-Operators. I’ve recently started participating in this groups mailing-lists and plan to start attending the weekly meetings. It will be nice to have a strong operators presence at the summit and hopefully we can smooth out some of the rough edges when it comes to deployment.

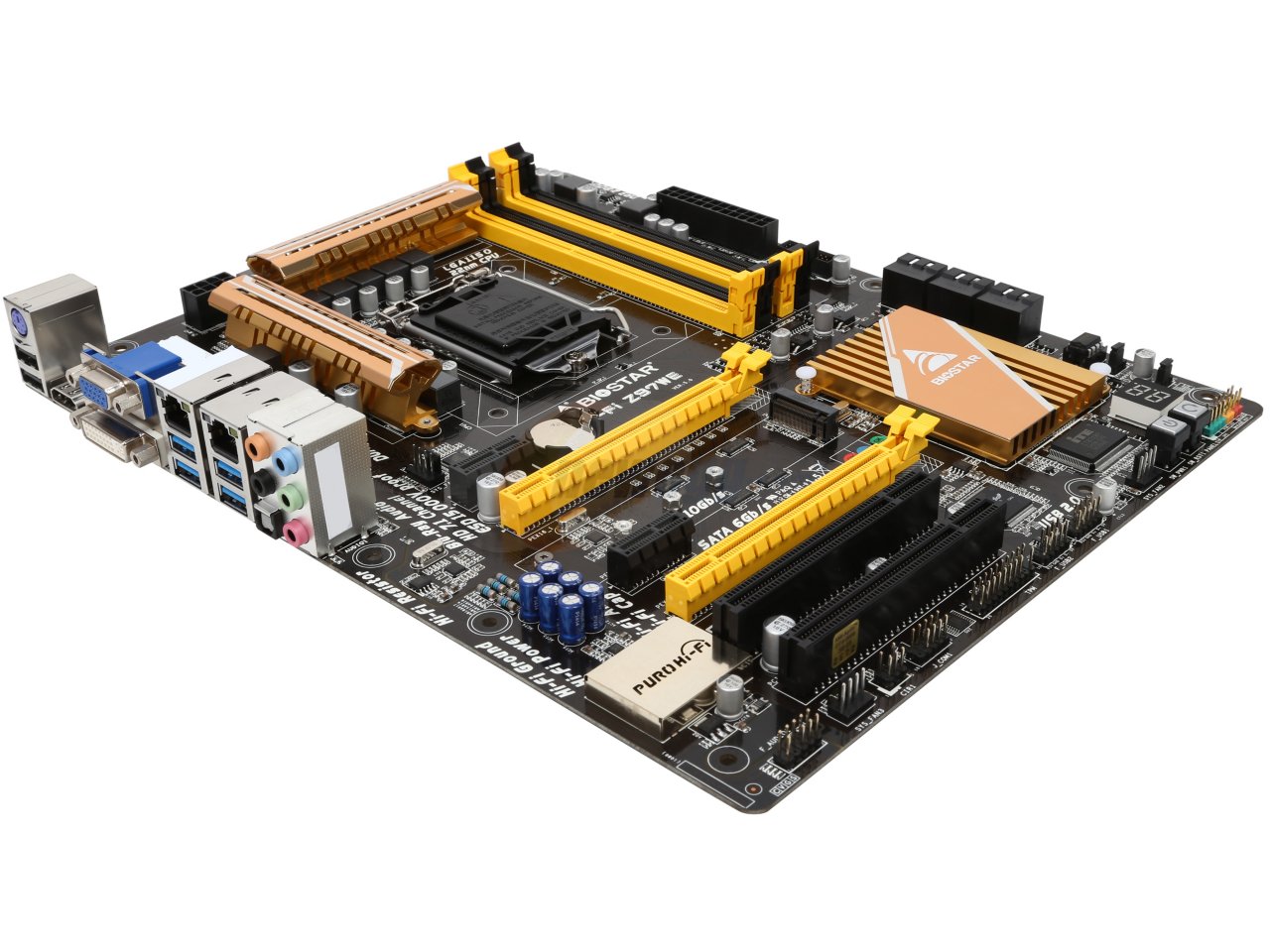

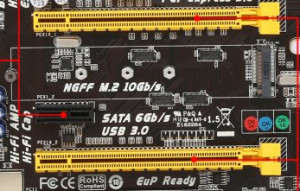

What I hadn’t noticed though was that the motherboard I chose (

What I hadn’t noticed though was that the motherboard I chose (